The meaning of practical & useful quantum computing

According to some predictions, the first useful quantum computers will become available by the end of the decade. The term “useful” is commonly used to describe this next generation of quantum computers, which will be fault-tolerant and able to solve commercially important problems that classical computers cannot. However, for quantum computing to make a significant impact, these machines must also be cost-effective and scalable enough for practical, commercial use by businesses.

To understand why it is crucial that quantum computers be “practical”, we should look back at the evolution of computers from costly, bulky industrial machines to accessible, practical tools for businesses and individuals.

The first commercial computers, such as the UNIVAC I and the IBM 701, were introduced in the early 1950s. They were incredibly expensive and complex machines that consumed large amounts of energy and took up entire rooms. This made them accessible only to the very few that could afford them: mostly government agencies, research institutes, and a few of the largest corporations.

Early computers were primarily used for specific purposes such as scientific research, cryptography, ballistic calculations, flight simulations and other military applications. The limited usability was due to the shortcomings of the available technology. For example, early computers used electronic components such as vacuum tubes for processing. The vacuum tubes were expensive and difficult to manufacture, generated a lot of heat, and required cooling systems.

Furthermore, vacuum tubes were prone to failures due to vibrations, temperature fluctuations, and power surges. This resulted in frequent breakdowns and downtime. And as early computers lacked sophisticated error detection and correction mechanisms, errors could easily go unnoticed and propagate through computations, leading to inaccurate results. Sounds familiar?

The use of commercial computers grew rapidly in the 1950s and 1960s. As chips based on transistor technology advanced and miniaturization techniques were developed, computers became smaller, more powerful, and more accessible. By the 1970s, computers were being used in a wide range of industries. They had become practical, in the sense that they were cost-effective and scalable enough to solve real-world problems of practical size.

Roadblocks to commercialization

Similarly, realizing the vision of commercially viable (practical), fault-tolerant (useful) quantum computers will require overcoming the challenges of miniaturization, scalability, and cost efficiency.

To correct errors and perform useful computations at scale, quantum computers will need millions of qubits. The reason is that quantum systems are incredibly sensitive to environmental disturbances such as electric and magnetic fields, heat, and vibrations, which can cause qubits to lose their quantum information, leading to errors in computations. To correct those errors, a few thousand physical qubits are used to maintain and protect a single “perfect” logical qubit.
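The arithmetic behind "millions of qubits" can be sketched in a few lines. This is only an illustration: the function names are hypothetical, and the figures in the usage lines (1,000 logical qubits, 2,000 physical qubits per logical qubit) are assumed values chosen to match the "few thousand" overhead mentioned above, not measured numbers for any particular machine.

```python
def physical_qubits_needed(logical_qubits: int, physical_per_logical: int) -> int:
    """Total physical qubits required to protect a given number of logical qubits."""
    if logical_qubits < 0 or physical_per_logical <= 0:
        raise ValueError("counts must be non-negative and the overhead positive")
    return logical_qubits * physical_per_logical


def logical_qubits_supported(physical_qubits: int, physical_per_logical: int) -> int:
    """Number of fully protected logical qubits that fit in a physical-qubit budget."""
    if physical_qubits < 0 or physical_per_logical <= 0:
        raise ValueError("counts must be non-negative and the overhead positive")
    return physical_qubits // physical_per_logical


# Illustrative, assumed figures: 1,000 logical qubits at 2,000 physical qubits each.
total = physical_qubits_needed(1_000, 2_000)
print(f"{total:,} physical qubits")
```

Under these assumed figures, even a machine with only a thousand logical qubits already lands in the millions of physical qubits, which is why the error-correction overhead dominates the size of the system.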

So, just like in the early days of classical computing, error correction is essential to ensure that quantum computers can perform reliable computations. Otherwise, the quantum advantage is quickly lost, and the rapid accumulation of errors prevents the implementation of powerful, long algorithms for solving complex problems that are beyond the capabilities of classical computers.

With existing techniques, a quantum computer with millions of qubits will need a massive infrastructure of multiple ultrafast feed-forward control systems, along with complex cooling systems and other supporting equipment. Hence, the so-called next generation of quantum computers, which are currently under development, will likely be much bigger than even the bulky, room-size computers of the 1950s. In fact, some of these computers will be the size of a football field.

Given the power and footprint requirements of current architectures, the first fault-tolerant, commercially viable quantum computers will be highly expensive and complex, and will require specialized expertise. As such, it is likely that only well-funded research institutions and governments will be able to afford and benefit from them, without widespread commercial use by businesses.

So, just like their classical counterparts, quantum computers will reach a tipping point where the realization of practical applications depends on the ability to minimize the resource overhead in large-scale computations.

Addressing photonic quantum computing challenges

Overcoming the resource overhead problem to make quantum computing both useful and practical is a major challenge for existing technologies. Among them, photonics offers the most promising route towards useful, large-scale fault-tolerant quantum computers. One of the main advantages of using photons as qubits is the ability to create a modular and scalable architecture with high connectivity, the lack of which seems to be the main obstacle for matter-based qubits.

In the photonics approach, resource-state generators (RSG) are used to create clusters of entangled photons that can be stitched into a fabric of millions of qubits using switches and simple optical fibers serving as a huge quantum memory.

While this capability can be used to repeatedly generate multiple photonic qubits, generating even small clusters of entangled photons involves substantial challenges. The reason is that the conventional approach relies on multiple probabilistic, inefficient attempts at both generating and entangling the photons, and a huge amount of resources is required to overcome the low success probability of this process.

In fact, using existing technologies, each photon in the entangled cluster requires thousands of attempts before one succeeds. Accordingly, a fault-tolerant quantum computer with millions of qubits must be built with massive redundancy of single-photon sources and entangling circuits, requiring multiple, massive RSGs that take up the space of an entire factory floor.
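A rough way to see where this redundancy comes from is to model each attempt as an independent trial with a fixed success probability. The sketch below is a simplified model under that assumption, not a description of any specific hardware: the function names are hypothetical, and the success probability of 0.001 (one success per thousand attempts) and the 99% target are illustrative values only.

```python
import math


def expected_attempts(p_success: float) -> float:
    """Mean number of independent attempts until the first success (geometric model)."""
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must be in (0, 1]")
    return 1.0 / p_success


def parallel_attempts_needed(p_success: float, target: float) -> int:
    """Smallest number of parallel attempts so that at least one succeeds
    with probability >= target, assuming independent attempts."""
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must be in (0, 1]")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be in (0, 1)")
    if p_success == 1.0:
        return 1  # a deterministic source needs no redundancy
    # Solve 1 - (1 - p)^n >= target for the smallest integer n.
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p_success))


# Illustrative, assumed values: one success per thousand attempts, 99% target.
p = 0.001
print(expected_attempts(p))
print(parallel_attempts_needed(p, 0.99))
print(parallel_attempts_needed(1.0, 0.99))
```

In this simplified model, pushing a probabilistic source close to certainty takes several times more parallel attempts than the average number of tries, while a deterministic source (success probability 1) needs only a single attempt per photon.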

Introducing the photon-atom gates architecture

To solve the resource overhead problem in photonic implementations, Quantum Source has developed an innovative photon-atom gates architecture. This architecture combines the advantages of photons (modularity, scalability and connectivity) with the key advantage of atoms – the ability to deterministically generate single photons and entangle them.

Our approach addresses the key challenge in realizing fault-tolerant, large-scale quantum computers – generating the clusters of entangled qubits that are the crucial “fuel” of the quantum computation.

To tackle this challenge, our system is designed to allow for deterministic interaction between the atom and the photon emitted from it, leveraging advanced Cavity Quantum Electrodynamics (Cavity-QED) techniques for enhancing light-matter interactions by focusing light into micron-scale optical resonators on a photonic chip. The result is tight confinement conditions in which the electric field associated even with a single photon is strong enough to allow deterministic interaction between atoms and photons.

This technique facilitates valuable atom-mediated quantum operations, including single-photon generation and photon-atom quantum logic gates. It allows for using only a small number of trapped atoms on each chip to generate and entangle the very large number of photons required for fault-tolerant photonic quantum computing.

The deterministic nature of the photon-atom quantum logic gates minimizes the need for costly and complex feed-forward and switching operations that are necessary in other implementations. The result is a practical design for quantum computers with a small footprint and low power consumption that can support millions of photonic qubits in a scalable platform.

The next-next generation of quantum computing

History tends to repeat itself, and the field of computing is no exception.

The current stage in the evolution of quantum computing is very reminiscent of the era of the first commercial computers, characterized by rapid advancements yet limited practical applications. While prominent quantum computing players have demonstrated a path to building fault-tolerant systems, the probabilistic nature of these designs creates very high overheads that significantly degrade their cost-effectiveness.

These first commercial quantum computers will indeed be more useful, marking a significant milestone in the evolution of quantum computers towards practical applications. However, they will probably not usher in a revolution like that of chip-based computers, which led to the widespread adoption of computers, and the creation of an entire ecosystem of applications around them.

Just like in the transition from vacuum tubes to chips, the real quantum revolution will begin when quantum computers become affordable and cost-effective to scale - in other words, “practical”. While there are still significant challenges ahead, we believe that our approach will pave the way for practical, fault-tolerant quantum computers that are scalable, compact, and dramatically cheaper, and that enable wide use in commercial applications.

categories

popular

P-Atom-Mediated Deterministic Generation and Stitching of Photonic Graph States

How single atoms can solve the most demanding challenge in photonic quantum computation: deterministic generating of graph states

P-A passive photon-atom qubit SWAP gate

The first experimental demonstration of a qubit SWAP gate between two different types of qubits.

Similar

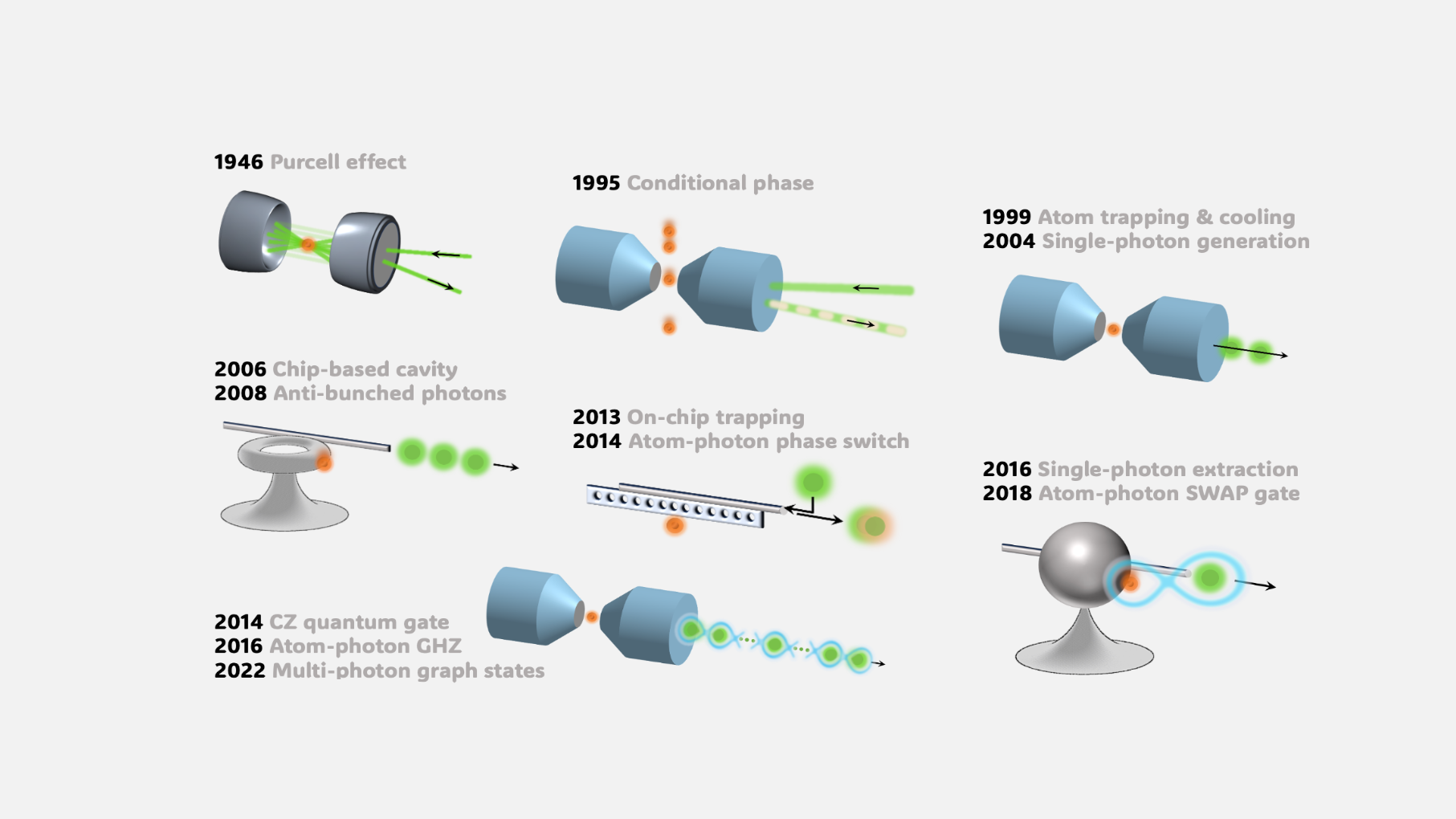

Controlling photons with atoms in a cavity: from bulk to integrated systems

This survey explores the evolution of cavity quantum electrodynamics (QED) concepts and technology over the years, transitioning from bulk optical structures and transient atoms to integrated fabrication-based resonators and stationary atoms.

Quantum information processing with photonic and atomic qubits

The interaction between photonic and matter qubits represents an exciting and promising avenue for the development of quantum computers.

popular

V-Quantum Frontiers: National Strategy, Global Impact

At QWC 2025, former Prime Minister of Israel and Quantum Source Board Member Naftali Bennett joined Preston Dunlap, former inaugural CTO and Chief Architect of the U.S. Space Force & Air Force and founder of Arkenstone Ventures, for a timely discussion on how nations and companies can navigate the pivot from promise to practical quantum capability.

V-Beyond the Qubit Wall: Scaling Photonic Computing with Atoms

At QWC 2025, Quantum Source laid out how photonics could leap from probabilistic lab demos to room-temperature, fault-tolerant systems. In a tightly argued talk, CEO Oded Melamed described a hybrid approach that couples single photons with single atoms on one platform, aiming to make resource-state generation deterministic and compact enough for a standard server room.

V-Making Photonic Quantum Computation Scalable Using Single Atoms

At Q2B Tokyo 2025, our CEO Oded Melamed presented “Making Photonic Quantum Computation Scalable Using Single Atoms,” explaining how our photonic-based approach addresses key challenges to enable scalable quantum computing.

popular

N-Quantum Source Delivers Practical Photonic Quantum Computing

In this Forbes exclusive interview, published just ahead of Quantum World Congress, Gil Press highlights how Quantum Source’s atom-photon technology is paving the way toward scalable, fault-tolerant photonic quantum computing.

N-Quantum Source Unveils ‘ORIGIN’ - A Noval Core Engine For Scalable, Fault-Tolerant Photonic Quantum Computers

ORIGIN’s vision and concept will be presented at the Quantum World Congress (QWC) in Washington, D.C. on September 17, 2025, in a keynote session featuring Former Israeli Prime Minister Naftali Bennett, a member of the company’s Board, and Quantum Source Co-Founder and CEO Oded Melamed.

N-Exclusive: Prof. Barak Dayan on Quantum Source’s Photon-Atom Breakthrough in Quantum Computing

In this TQI exclusive interview, Prof. Barak Dayan, Chief Scientist at Quantum Source, shares how their photon-atom technology is tackling some of the toughest challenges in quantum computing.